How big tech can anticipate its responsibilities

11/10/2017

“The Circle” is a powerful, albeit dystopian, novel by the American writer Dave Eggers about an eponymous fictional Internet company. Think Google, Apple, LinkedIn and more rolled into one. The novel follows the central character Mae Holland as she lands her dream job at The Circle and swiftly rises through its ranks. As she does so, the reader is introduced to a range of sophisticated technologies that The Circle has developed, including SeeChange: light, portable cameras that can provide real-time video with minimal effort. Eventually, SeeChange cameras are worn all day long by politicians wishing to be ‘transparent’, allowing the public to see what they are seeing and doing at all times. When I first read The Circle, on its publication in 2013, it felt to me like a Brave New World or 1984 for the 21st century. The book was made into a movie with Tom Hanks and Emma Watson earlier this year. Although the movie was panned by the critics, I still strongly recommend the original Eggers book. It raises fascinating and important issues about what responsibilities Big Tech companies like the fictional Circle have for the impacts of their products and services, and about who gets to decide whether specific products are in society’s interest.

I was reminded of The Circle by the announcement last week that DeepMind, the British artificial intelligence company acquired by Google in 2014 for more than $500 million, has established a new research unit, DeepMind Ethics & Society, to complement its work in AI science and application. This new unit will “help us explore and understand the real-world impacts of AI. It has a dual aim: to help technologists put ethics into practice, and to help society anticipate and direct the impact of AI so that it works for the benefit of all.” (Why we launched DeepMind Ethics & Society: https://deepmind.com/blog/why-we-launched-deepmind-ethics-society/ )

As the company explains in a blog post accompanying the announcement:

“We believe AI can be of extraordinary benefit to the world, but only if held to the highest ethical standards. Technology is not value neutral, and technologists must take responsibility for the ethical and social impact of their work.”

The new unit, DeepMind Ethics & Society, has already hired six researchers with backgrounds in areas such as philosophy, ethics and law, and hopes to expand to a team of 25 in 2018. Apart from the full-time staff that DeepMind itself will employ, the unit will also be overseen by six “fellows”: independent researchers who will function as a kind of academic peer review board. They include Jeff Sachs, the director of Columbia University’s Earth Institute; Nick Bostrom, an Oxford University philosopher; and the economist Diane Coyle.

Humankind is making amazing advances in many areas of technology which create exciting possibilities for enhancing the quality of our lives and advancing sustainable development. Unsurprisingly, as the former Cranfield Institute of Technology, Cranfield University is involved in many of these new technologies.

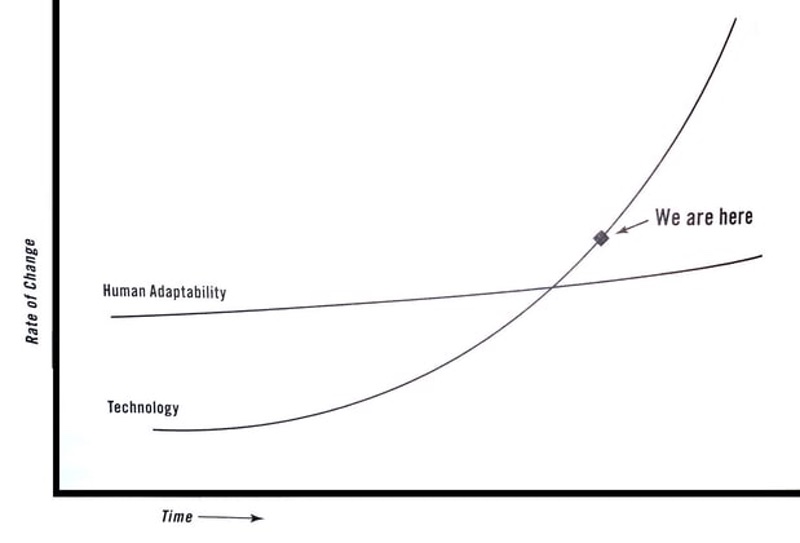

It is crucial, however, that society considers the ethical implications of these advances in parallel. Thomas Friedman, the op-ed columnist at The New York Times and author of numerous best-sellers such as The World is Flat and Hot, Flat and Crowded, was widely derided by techies for the graph below from his most recent book, Thank You for Being Late: An Optimist’s Guide to Thriving in the Age of Accelerations (2016).

(See for example: https://www.gizmodo.com.au/2016/11/this-is-a-real-graph-from-thomas-friedmans-latest-book/ )

Thomas Friedman’s Thank You For Being Late (2016)

On this one, I am with Friedman: there is a very real danger of technology outpacing humankind’s capacity to process and evaluate the ethics and impacts of that technology. And that can produce popular backlashes. Whatever you may feel about Genetically Modified Organisms (GMOs), the failure of Monsanto and the other big agri-businesses in the mid-1990s to engage seriously with popular and media concerns about GMOs led to the scares of “Frankenstein foods” and has already set back the GMO cause in Europe by two decades.

It is, therefore, in the interests of businesses at the forefront of new technologies to be fully engaged in popular debates about the ethics and the implications of what they are working on. Transparency is not an optional extra – it is a vital prerequisite for a license to operate, let alone for commercial sustainability.

DeepMind, therefore, is well-advised to try to mitigate the risks of popular, media and ultimately regulatory backlash against AI.

Stakeholder Advisory Panels, Chief Ethics Officers, Ethical Screening processes, Social Impacts Research Units, regular Thought-Leadership White Papers, and round-tables with critical friends and even hostile critics will all be important for Big Tech.

The DeepMind announcement of its Ethics & Society Research Unit has already drawn criticism for lack of transparency (see, for example, “DeepMind now has an AI ethics research unit. We have a few questions for it…” by Natasha Lomas, TechCrunch, Oct 4, 2017).

At this stage, I would be more cautiously positive, welcoming an initiative to try to create appropriate mechanisms for dealing with the big ethical questions surrounding AI and other major technology breakthroughs. The test, of course, will be whether DeepMind will in future accept the advice of its new unit and be willing to abandon lucrative commercial opportunities if advised to do so on ethical grounds. And, crucially, whether it will tell us if it has done so.
