Exploring safer and smarter airports with the Applied Artificial Intelligence MSc group design project

26/03/2024

Artificial intelligence (AI) technologies have experienced rapid development in recent years, spanning from large language models (LLMs) to generative artificial intelligence (GAI). These cutting-edge advancements have significantly impacted various aspects of our daily lives. However, when integrating these technologies into professional domains like aerospace, transport, and manufacturing, careful design and comprehensive testing are imperative to enhance the safety of human users and facilitate efficient collaboration with machines.

Moreover, a crucial consideration is how these AI technologies can adapt to and revolutionise existing ecosystems within high-value and high-demand infrastructures, such as airports, aircraft, and various advanced mobility systems.

Utilising innovative AI technologies and leveraging the platform advantages of Cranfield University, the MSc Applied Artificial Intelligence course aims to cultivate future leaders in applied AI across diverse engineering domains. Its primary objective is to expedite the development and deployment of reliable AI technologies for safety-critical applications worldwide.

The group design project (GDP) is a problem-based learning module, and the aim of the GDP is for students to design, implement, validate and test real-time AI-based systems to solve real-world problems. The GDP also aims to provide students with the experience of working on a collaborative engineering project, satisfying the requirements of a potential customer and respecting deadlines.

In 2022 and 2023, students enrolled on our MSc in Applied Artificial Intelligence were assigned a stimulating and demanding group design project. The objective was to leverage the applied AI knowledge acquired from their coursework to develop innovative and safer airport products. Working in small teams of six individuals, students were tasked with designing solutions encompassing software and hardware architecture, AI model development and testing, as well as real-world engagement aspects.

The project’s topic was intentionally broad, requiring students to collaborate within their groups to explore and refine specific areas of interest based on their collective expertise and interests. This approach fostered creativity, teamwork, and a deeper understanding of the practical application of AI technologies in real-world scenarios.

Each group was asked to develop real-time AI solutions for smart airports to achieve the following functionalities:

- The system shall be capable of detecting human users and estimating their poses and behaviours based on precise pose detection and tracking.

- The system shall be able to classify different crowd behaviours and clarify the reasons, importance, and feasibilities.

- The AI model should be cross-validated with different metrics covering accuracy, computational cost, and inference speed.

- The AI model should be able to be implemented in real-time to inform the advantages and disadvantages of current AI technologies in these safety-critical applications.

- The system can rely on different sensor sources as input to enable sensor fusion for robust performance; however, very low-cost but efficient solutions are also welcome.

Case study 1: Fall detection in an aircraft maintenance environment.

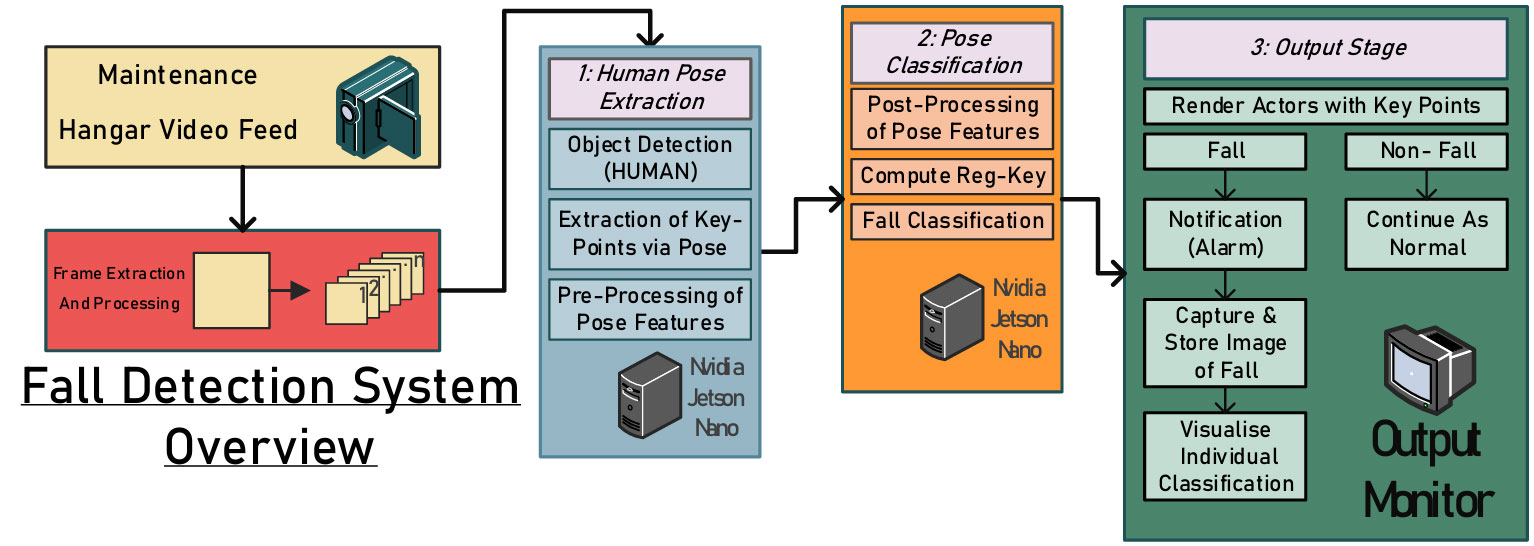

Maintenance environments pose significant hazards, including unattended machinery, inadequate fencing or physical guards near dangerous areas, and cluttered workspaces. Among these risks, fatal falling injuries are alarmingly common. Detecting and reporting non-fatal incidents promptly could prevent further harm or fatalities. Therefore, this work proposes an integrated vision-based system to monitor employees during aircraft maintenance activities, enhancing safety and preventing accidents (see the figure below).

The initial training and validation results suggested that, in the absence of a ready-made airport hangar maintenance dataset, the model could be biased towards images from videos captured at perpendicular camera angles close to the subject. Making use of Cranfield’s facilities, the Cranfield University maintenance hangar was chosen for data collection in this project.

In total, about 50 short (two to five minutes) videos of simulated maintenance activities were recorded, some with falls and others without. The captured videos were split into frames and annotated using the MoveNet pose estimation library, and vector maps of the subject’s key joint positions were generated. The figure below shows some snapshots of the experimental data.

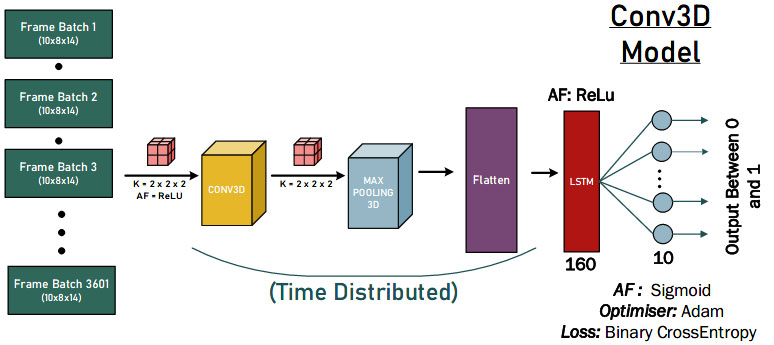
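
As a rough illustration of how per-frame pose output can become a vector, the sketch below assumes each frame yields 17 keypoints as (y, x, score) triples in normalised image coordinates, which is the layout MoveNet produces. The function name, the hip-centring step and the `min_score` threshold are illustrative assumptions, not the students' actual pipeline.

```python
from typing import List, Tuple

Keypoint = Tuple[float, float, float]  # (y, x, confidence score)

LEFT_HIP, RIGHT_HIP = 11, 12  # indices in the 17-keypoint COCO ordering


def pose_to_vector(keypoints: List[Keypoint], min_score: float = 0.3) -> List[float]:
    """Flatten one frame's keypoints into a hip-centred feature vector.

    Joints below `min_score` are zeroed so they do not inject noise.
    """
    if len(keypoints) != 17:
        raise ValueError("expected 17 keypoints")
    # Use the midpoint of the hips as the origin so the vector does not
    # depend on where the person stands in the image.
    cy = (keypoints[LEFT_HIP][0] + keypoints[RIGHT_HIP][0]) / 2.0
    cx = (keypoints[LEFT_HIP][1] + keypoints[RIGHT_HIP][1]) / 2.0
    vector: List[float] = []
    for y, x, score in keypoints:
        if score < min_score:
            vector.extend([0.0, 0.0])
        else:
            vector.extend([y - cy, x - cx])
    return vector
```

Stacking these 34-value vectors over consecutive frames gives a time series that a sequence model can consume.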

Our students tested 1-D, 2-D, and 3-D convolutional neural network approaches and evaluated them quantitatively to identify the best-performing model design. The figure below demonstrates the 3-D convolution solution.

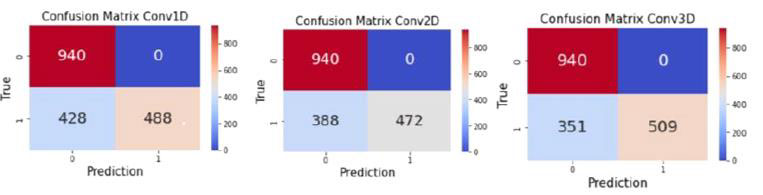
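
To make the 1-D case concrete, here is a minimal pure-Python sketch of a single 1-D convolution (cross-correlation, as deep learning frameworks compute it) sliding over a time series of one pose feature. The `hip_height` signal and the kernel values are made up for illustration; a trained network would learn many such kernels rather than use a hand-written one.

```python
from typing import List


def conv1d_valid(signal: List[float], kernel: List[float]) -> List[float]:
    """Slide `kernel` over `signal` with stride 1 and no padding."""
    k = len(kernel)
    if k == 0 or k > len(signal):
        raise ValueError("kernel must be non-empty and no longer than the signal")
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# Hypothetical hip height per frame (1.0 = standing, 0.2 = on the floor).
hip_height = [1.0, 1.0, 1.0, 0.6, 0.2, 0.2, 0.2]
# A difference kernel responds to a sudden change between adjacent frames.
drop_kernel = [1.0, -1.0]
response = conv1d_valid(hip_height, drop_kernel)
```

The response is largest at the frames where the height drops and zero where the pose is stable, which is the kind of temporal pattern a 1-D CNN can pick up from keypoint sequences.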

Finally, the proposed AI solutions achieved good detection results for the fall behaviour as shown in the figure below. A few conclusions can be drawn. First, the model produced zero false positive (FP) classifications, which suggests it does not mistake non-fall activity for a fall. Second, there are 940 true negatives for each model; this is likely because each test sample contains a portion of non-fall frames (classified as 0) before the actor falls over.
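
For readers who want to relate these counts to standard metrics, the sketch below computes precision, recall, specificity and accuracy from a confusion matrix. The zero false positives and 940 true negatives come from the results above; the true positive and false negative counts are placeholders, since they are not stated here.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return precision, recall, specificity and accuracy from confusion counts."""
    def ratio(num: int, den: int) -> float:
        # Guard against empty denominators (e.g. no positive predictions).
        return num / den if den else 0.0

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
    }


# fp=0 and tn=940 come from the text; tp and fn are placeholder values.
metrics = binary_metrics(tp=50, fp=0, tn=940, fn=10)
```

With zero false positives, precision and specificity are both 1.0 whatever the placeholder values are (as long as at least one fall is detected), so recall is the figure that separates the models. The large number of true negatives also inflates accuracy, which is worth keeping in mind when comparing results.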

Case study 2: Detection of vital signs of myocardial infarction using computer vision and edge AI

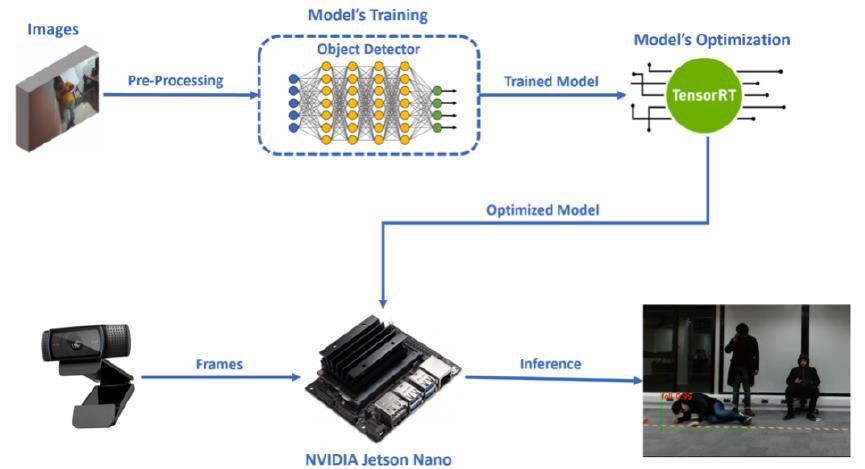

Edge AI refers to the deployment of artificial intelligence applications in devices located across the physical environment. Affordability and ease of use are key factors in the adoption of AI algorithms in situations where end users encounter real-world challenges. In this project, our student proposed a low-cost and lightweight heart attack detection model for rapid response and rescue at the airport. The process is composed of four main stages as shown in the figure below.

The first stage constitutes the appropriate selection and preparation of an image dataset, along with the necessary annotations for the bounding boxes of the classes (chest pain, fall).

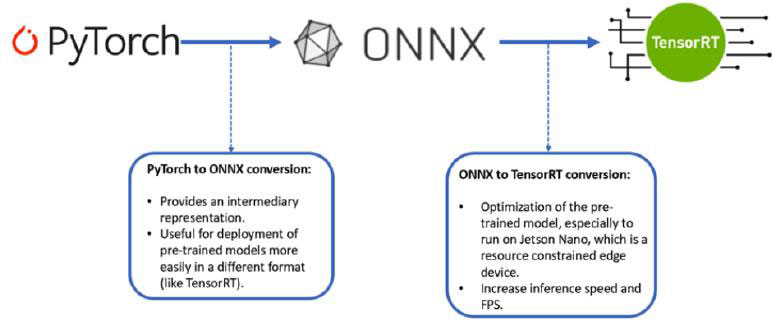

The second stage is the training of our object detector model via transfer learning. This stage was performed in Google Colab, using PyTorch. After training was complete, the model was deployed to NVIDIA’s Jetson Nano, the embedded device chosen for our Edge AI computer vision application.

The third stage of our system’s design was converting and optimising the model so that it runs more efficiently on the Jetson Nano. The optimisation was performed using NVIDIA’s TensorRT inference engine, and the process was executed on the Jetson Nano itself (as shown in the figure below).

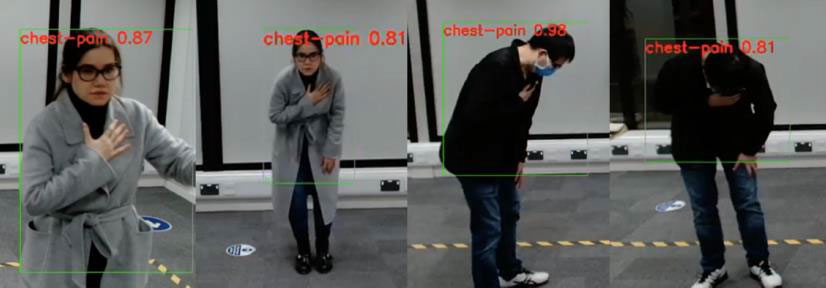

The last stage is running the optimised model on the Jetson Nano, using frames received from a web camera as input, to perform real-time object detection of our classes (chest pain, fall). The inference code that runs on the Jetson Nano covered two specific scenarios. The final inference results are shown in the figure below.

Case study 3: Crowd monitoring and social distancing analysis

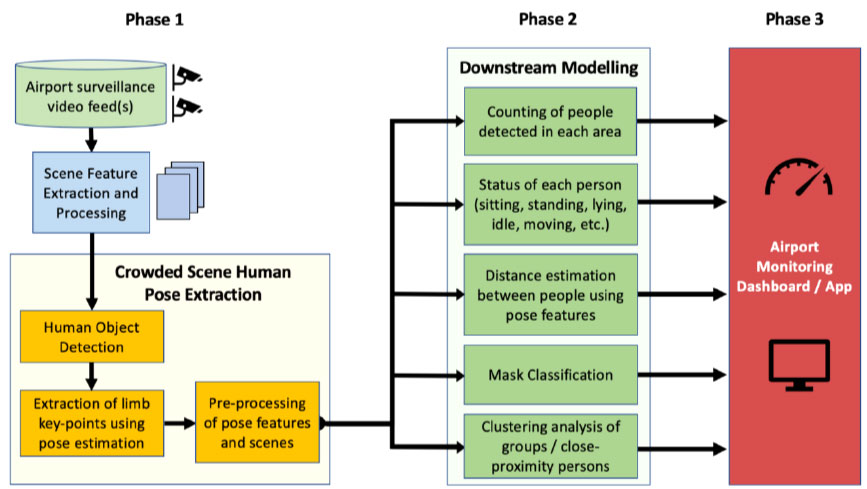

Airports handle huge passenger flows every day and, like other crowded locations and organisations, they must ensure public safety and implement adequate measures to mitigate risks during pandemics. In this project, our students proposed an integrated computer vision-based system that provides multi-functional crowd monitoring and analysis throughout airports. The system outputs are intended to benefit airport management staff and passengers alike by providing crowd-based analytics and intelligence.

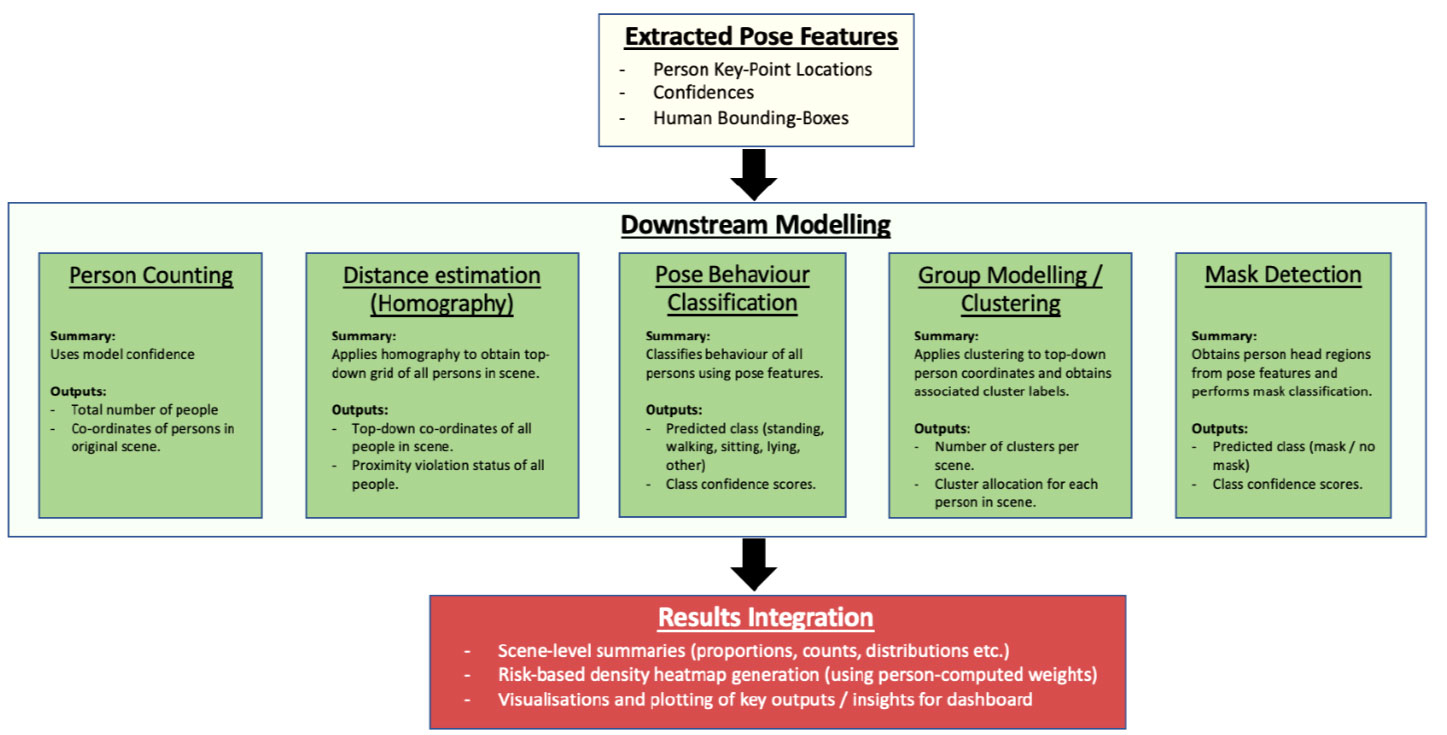

The system consists of an integrated platform (see the figure below) to analyse and monitor crowds in public locations using video surveillance feeds. The focus is specifically on smart airports, but the basic framework is adaptable to any public context where analysing and monitoring crowd characteristics is useful.

The pose features extracted from a scene are used by the system’s downstream models to perform distinct tasks. These include person counting, interpersonal distance estimation, mask object detection, status classification (sitting, standing, walking, lying etc.), and social clustering. The results are then combined to form the integrated dashboard and monitoring system. With the exception of the common use of pose features, these tasks represent distinct challenges with different modelling approaches. Fortunately, thanks to the modular system design, it was possible to abstract each task and have different team members develop them.

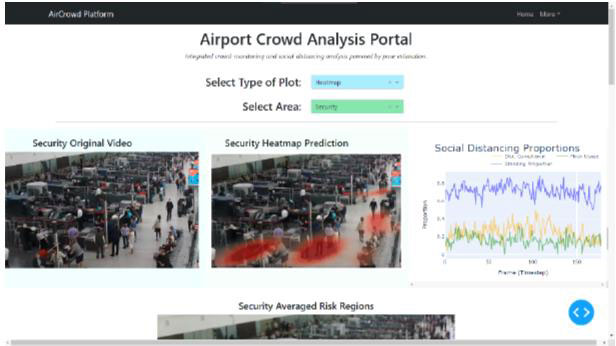

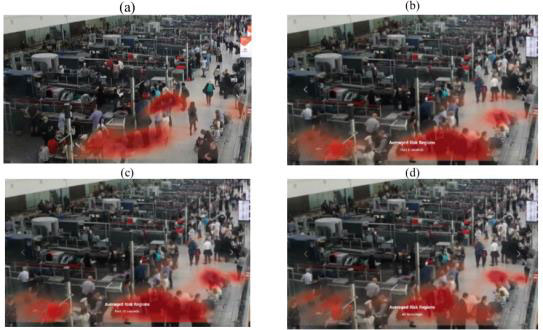
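
As one example of a downstream task, the sketch below shows a simple form of interpersonal distance estimation. It assumes each person has already been reduced to a ground-plane position in metres (for instance after camera calibration, which is not described here), and the 2.0 m threshold and function names are placeholders rather than values from the project.

```python
from itertools import combinations
from math import hypot
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) position in metres, assumed already calibrated


def close_pairs(
    positions: Dict[str, Point], min_distance: float = 2.0
) -> List[Tuple[str, str, float]]:
    """Return every pair of people standing closer than `min_distance`."""
    pairs = []
    for (id_a, a), (id_b, b) in combinations(sorted(positions.items()), 2):
        distance = hypot(a[0] - b[0], a[1] - b[1])
        if distance < min_distance:
            pairs.append((id_a, id_b, distance))
    return pairs


def violation_proportion(positions: Dict[str, Point], min_distance: float = 2.0) -> float:
    """Fraction of people involved in at least one too-close pair."""
    if not positions:
        return 0.0
    involved = set()
    for id_a, id_b, _ in close_pairs(positions, min_distance):
        involved.update((id_a, id_b))
    return len(involved) / len(positions)
```

A proportion like this is the sort of per-scene statistic that a monitoring dashboard can display alongside the person count.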

Last, an interactive interface is designed to integrate all the downstream outputs into a single viewport (see the figure below). The app uploads data files created by the downstream models to the dashboard in real time so that the current state of the scene can be analysed. At any given time, the original footage of the scene can be viewed, along with the pose features extracted from each person, on a video player adjacent to it. The decision maker can switch between a boxplot view and a heatmap view, and change which footage the data is received from, using two drop-down menus. Statistics regarding the scene are displayed on the far right of the viewport. These statistics are total person mask status, total risk profile, total persons pose status, total person count, social distancing proportions and proportions boxplot.

Case study 4: Violence detection at the airport

Last, one of our groups aimed to develop a violence detection framework that estimates human poses and classifies violent behaviour in surveillance footage (as shown in the figure below). Instead of directly extracting features from video frames, this framework uses ViTPose to detect human poses in each frame, then pre-processes and extracts features from the key point information.

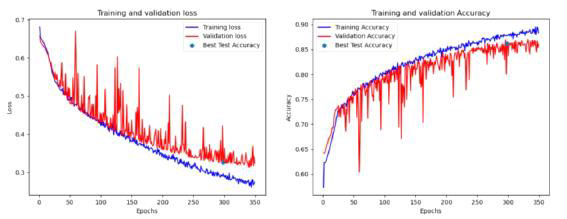

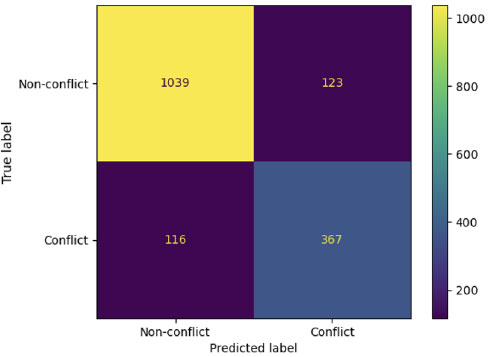

After a comprehensive analysis of various models using multiple datasets (angle-based, distance-based, 1-second and 2-second sequences) with a total of 162 hyperparameter combinations, the team identified several promising models that meet specific evaluation criteria. One can conclude that models can extract valuable information about violent behaviour using distance features of body key points, as shown in the figure below.

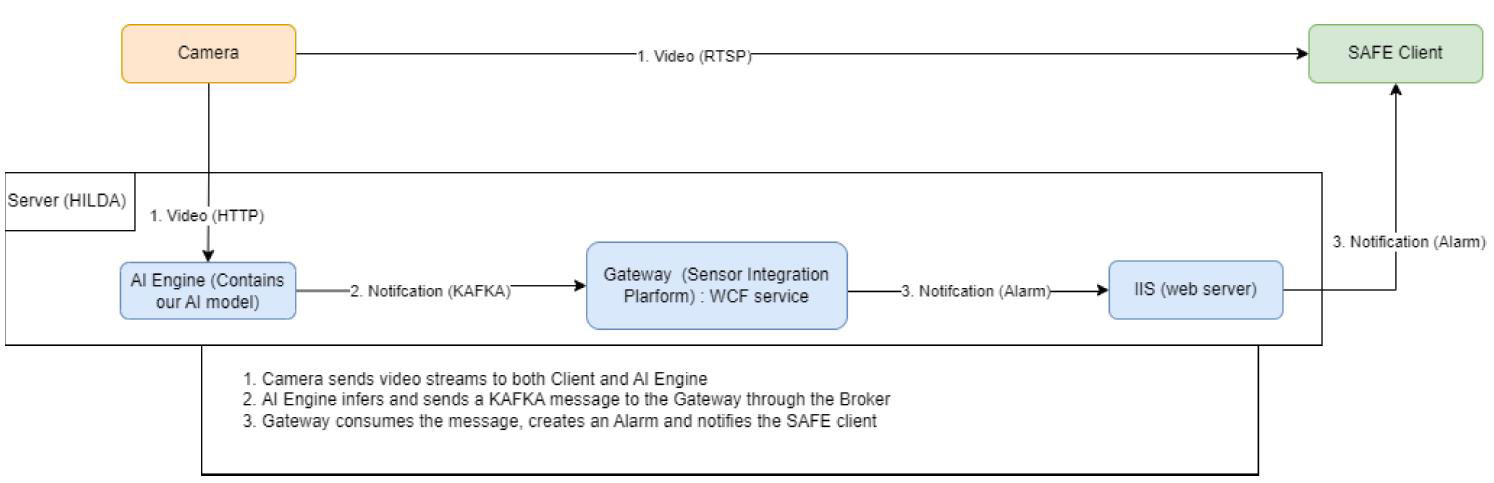

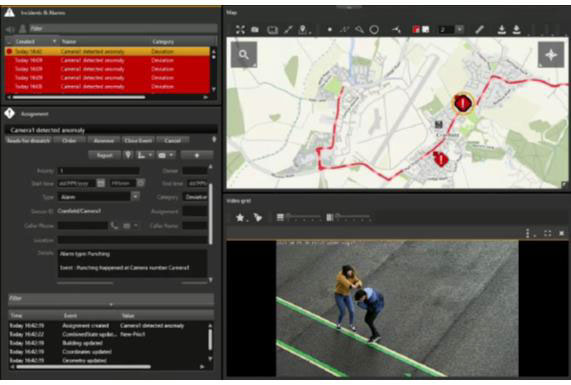
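
To illustrate what angle-based and distance-based features can look like, the sketch below computes one of each from 2-D key points. The choice of joints and the coordinates are hypothetical; the exact feature definitions used by the team are not given here.

```python
from math import acos, degrees, hypot
from typing import Tuple

Point = Tuple[float, float]  # (x, y) key point coordinates


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two key points."""
    return hypot(a[0] - b[0], a[1] - b[1])


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at joint `b` formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = hypot(*v1) * hypot(*v2)
    if norm == 0.0:
        return 0.0  # degenerate case: two key points coincide
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to absorb floating-point rounding before acos.
    return degrees(acos(max(-1.0, min(1.0, cosine))))


# Hypothetical shoulder, elbow and wrist positions for one arm.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)
wrist_to_shoulder = distance(wrist, shoulder)
```

Computing such features per frame and stacking them over a 1-second or 2-second window gives the kind of sequence a classifier can be trained on.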

Last, by collaborating with Saab UK, our students were able to develop and integrate their AI models with the industrial-level platform (SAFE), a powerful situation awareness platform widely used in many UK police stations for surveillance. A Kafka gateway follows the AI engine and forwards its output to the client terminal for display and alerts. If violence is detected, the intercepted video, annotated with bounding boxes, triggers the alarm we configured specifically for our model; the video is shown in the SAFE client layout together with an alert message giving the details. In the end, our students successfully deployed the AI model from our DARTeC centre and communicated with the Saab SAFE system for human situation awareness augmentation.

Creating the applied AI engineers of the future

These are only a few selected examples of interesting GDP projects from the Applied AI MSc course. More recently, our current students have undertaken more challenging GDP projects in explainable interfaces with AI, causal reasoning for autonomy motion planning, physics-informed AI for autonomous vehicles, and future airspace management. We believe more exciting research will be delivered by our MSc students soon.

To see the final solutions and results in more detail, check the following research publications produced by our students during the GDP:

- Osigbesan, Adeyemi, Solene Barrat, Harkeerat Singh, Dongzi Xia, Siddharth Singh, Yang Xing, Weisi Guo, and Antonios Tsourdos. “Vision-based Fall Detection in Aircraft Maintenance Environment with Pose Estimation.” In 2022 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), pp. 1-6. IEEE, 2022.

- Fraser, Benjamin, Brendan Copp, Gurpreet Singh, Orhan Keyvan, Tongfei Bian, Valentin Sonntag, Yang Xing, Weisi Guo, and Antonios Tsourdos. “Reducing Viral Transmission through AI-based Crowd Monitoring and Social Distancing Analysis.” In 2022 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), pp. 1-6. IEEE, 2022.

- Üstek, İ., Desai, J., Torrecillas, I.L., Abadou, S., Wang, J., Fever, Q., Kasthuri, S.R., Xing, Y., Guo, W. and Tsourdos, A., 2023, August. Two-Stage Violence Detection Using ViTPose and Classification Models at Smart Airports. In 2023 IEEE Smart World Congress (SWC) (pp. 797-802). IEEE.

- Benoit, Paul, Marc Bresson, Yang Xing, Weisi Guo, and Antonios Tsourdos. “Real-Time Vision-Based Violent Actions Detection Through CCTV Cameras With Pose Estimation.” In 2023 IEEE Smart World Congress (SWC), pp. 844-849. IEEE, 2023.
