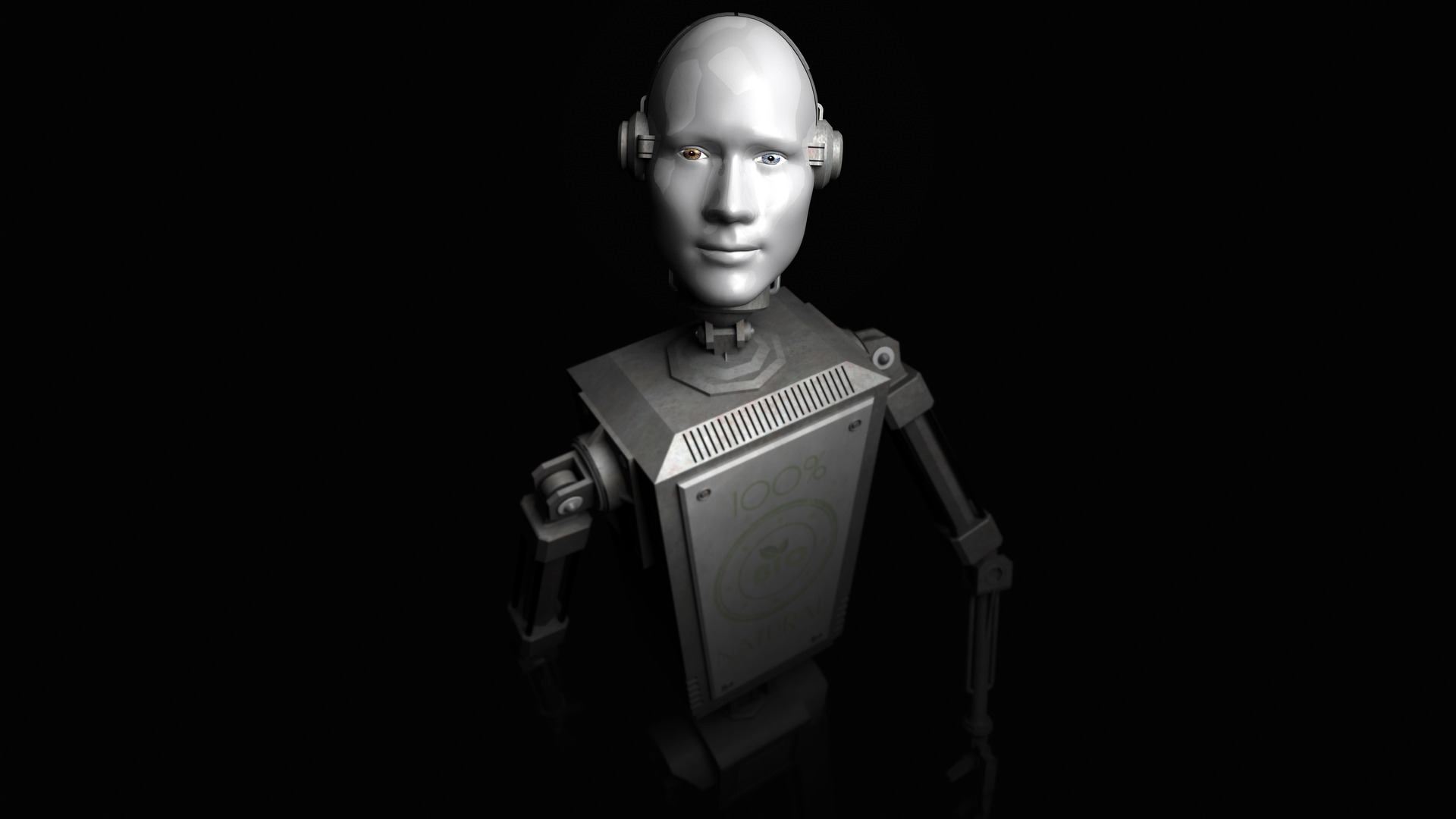

Our relationship with robots is messy and confused

03/01/2018

An animal charity in San Francisco has become a target for much global criticism and local abuse, all because of the behaviour of its most recent recruit: an R2D2-style robot.

The 1.5-metre-tall Knightscope security robot trundled around neighbouring car parks and alleyways recording video, stopping to say hello to passers-by. But it was also accused of harassing homeless people living in nearby encampments, who saw visits by the winking, bleeping robot as nothing more than surveillance by an intruder feeding information to the police.

A social media storm led to calls for acts of retribution, violence and vandalism against the charity. The Knightscope itself was regularly tipped over, once covered in a tarpaulin, and smeared with barbecue sauce and even faeces. It’s not the first time the presence of electronic brains in the real world has caused problems. A similar robot in another US city inadvertently knocked over a toddler; others have been tipped over by disgruntled office workers.

It’s an example of how robot technologies can provoke extreme reactions. Security was a real issue for the charity. There had been a string of break-ins, incidents of vandalism and evidence of hard drug use that was making staff and visitors feel unsafe, and it seems reasonable the charity should try to make people feel safer. No-one intended to interfere with the lives of homeless people, some of whom found the robot endearing.

New forms of Artificial Intelligence

The introduction of new forms of Artificial Intelligence into people’s everyday lives is one of the biggest challenges facing societies. How far should we let robots walk the same streets, live in our homes, look after relatives, perform surgery, drive our trains and cars? What kinds of roles are acceptable and what are not? And most importantly, who sets the rules for how they behave, and how they decide on priorities?

The potential of robot-enhanced living – in everything from transport to health and social care – and the huge commercial opportunities involved mean we will become more accepting of robots as they become a more familiar and inescapable part of our lives. But that central issue, of what kinds of robots we want and where, needs dealing with now. The debate needs to be shaped as much by ordinary members of the public as by technologists and engineers. We all have a stake in deciding what makes a ‘good’ robot.

Robot ethics

The British Standards Institution (BSI) published the first standard for robot ethics, BS 8611, in 2016. But that’s just the start. As part of its work with the BSI’s UK Robot Ethics Group, Cranfield University wants the views of the public on the future of robots in our lives (www.cranfield.ac.uk/robotethics) to help develop new standards and inform the work of developers and manufacturers.

Our relationship to AI and to robots is messy and confused. On the one hand, there are attacks on robots when there’s any suggestion of potential intrusion. On the other, we’re increasingly attached emotionally to our personal technologies, to our personalised phones and tablets, and make pets of robot toys and anything which shows signs of engagement, no matter how limited and fake. We need to be clear-sighted about the future of human-robot relationships, and that means having the debate now, before the sheer scale of consumer opportunities and cost savings makes the decisions for us.

—

Find out more about our new Robotics MSc course: https://www.cranfield.ac.uk/courses/taught/robotics

Read more about our work in this area: www.cranfield.ac.uk/robotethics

Leave a comment on this post:

Personally, I believe the adoption of AI and robots will no doubt become commonplace in future generations. On a more philosophical footing, are we not already human versions of robots, performing mundane tasks in the same way at the same times, over and over again, every day of our lives? We perform many of these tasks unconsciously whilst thinking about more pressing or concerning issues that are more personally connected to our individual circumstances. What if these tasks could be removed? What if healthcare became automated? What if poverty could be eradicated? What if AI and quantum computing could find cures for disease?

What if robots and AI could remove the physical function of performing many mundane tasks, building more capacity in our unconscious minds, which could, over time, be evolved and trained to focus on more positive tasks, like building a society where we care for each other as a human race, leaving the robots to do the boring stuff? Tax the robots to fund more social support mechanisms, put more focus on the family and caring for our elders, and live more creative, caring lives that respect our environment and foster more interaction with each other, rather than aspiring to a capitalist regime based on a class system that feeds off the divide between rich and poor. Rich get richer, poor get poorer. Robots and AI development could be the first step in our species making the next evolutionary leap, removing the divisions in society and eradicating the class system which we live under.

Our lives would be much richer if we had more time to enjoy them, to realize what is most important to us, and to see the beauty that surrounds us. I have no doubt that re-focusing our minds, conscious and unconscious, on such things would only benefit society as a whole, ensuring our survival as a species.

The BIG question is how willing BIG Business is to adopt solutions that could one day remove the need for it. For example, the pharmaceutical industry: in a way, it controls the lives of millions of people and thrives off illness. If AI and quantum computing were to find cures, where would it find itself?

Just a thought!